Multimodal Relational Interpretation for Deep Models

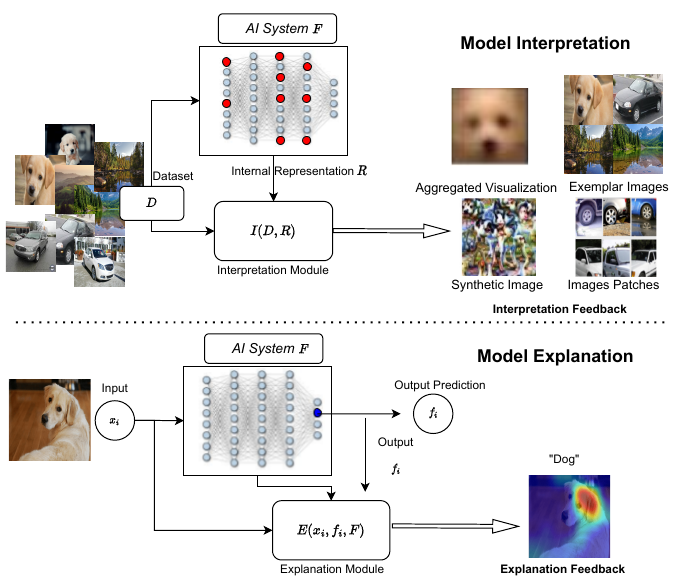

Interpretation and explanation of deep models are critical to the wide adoption of systems that rely on them. Model interpretation consists of gaining insight into the information a model has learned from a set of examples. Model explanation focuses on justifying the predictions a model makes on a given input. While the number of efforts addressing model explanation continues to grow, its interpretation counterpart has received significantly less attention. In this project we aim to take a solid step forward in the interpretation and understanding of deep neural networks. More specifically, we will focus our efforts on four complementary directions. First, we will reduce the computational cost of model interpretation algorithms and improve the clarity of the visualizations they produce. Second, we will develop interpretation algorithms capable of discovering complex structures encoded in the models being interpreted. Third, we will develop algorithms that produce multimodal interpretations based on different types of data, such as images and text. Fourth, we will propose an evaluation protocol to objectively assess the performance of model interpretation algorithms. As a result, we aim to propose a set of principles and foundations that can be followed to improve the understanding of any existing or future complex deep model.

Acknowledgements

This project was supported by the UAntwerp BOF-DOCPRO4-NZ Grant id:41612.

Publications

SVEBI: Towards the Interpretation and Explanation of Spiking Neural Networks

Jasper De Laet, Hamed Behzadi, Lucas Deckers, José Oramas M.

ECML-PKDD 2025

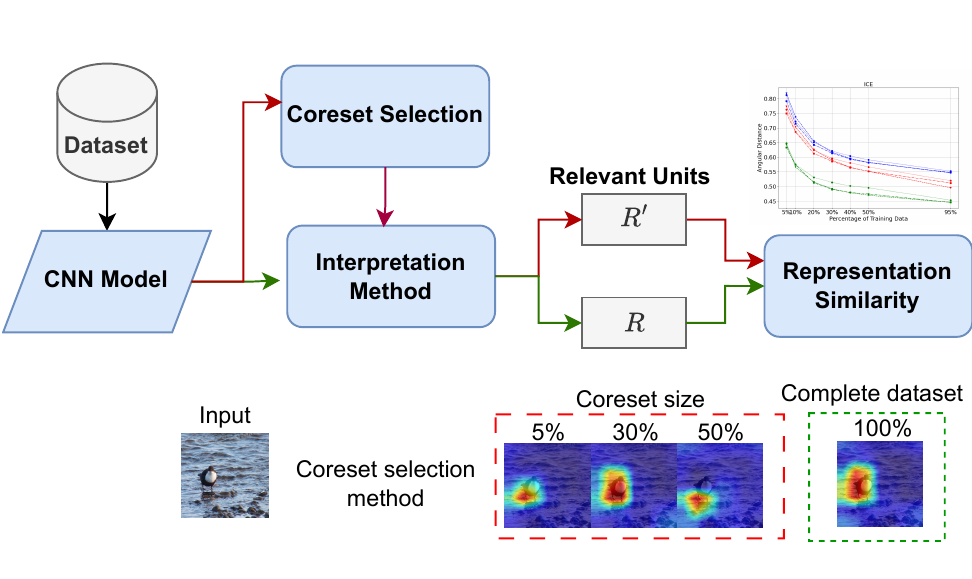

Deep Model Interpretation with Limited Data: A Coreset-based Approach

Hamed Behzadi-Khormouji, José Oramas M.

Technical Report

Pre-print (arXiv:2410.00524)

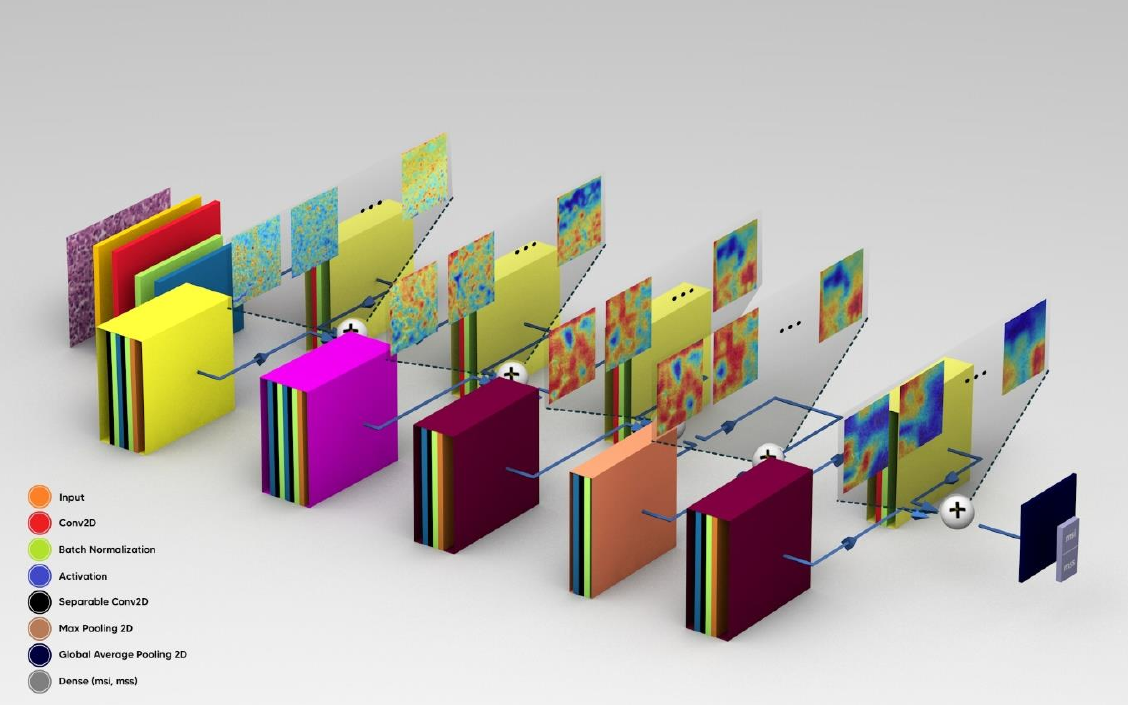

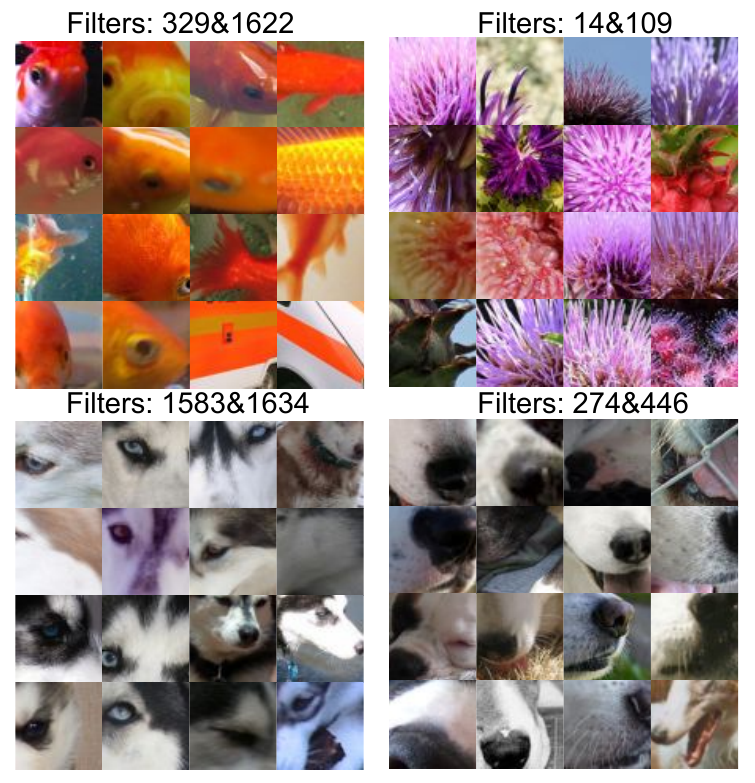

FICNN: A Framework for the Interpretation of Deep Convolutional Neural Networks

Hamed Behzadi-Khormouji and José Oramas M.

Technical Report

PDF (arXiv:2305.10121)

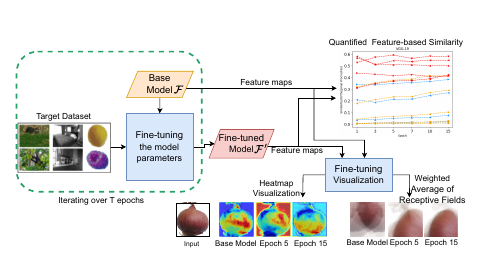

An Empirical Study of Feature Dynamics During Fine-tuning

Hamed Behzadi-Khormouji, Lena De Roeck, José Oramas M.

ECML-PKDD Workshops (XKDD) 2024 (oral)

PDF

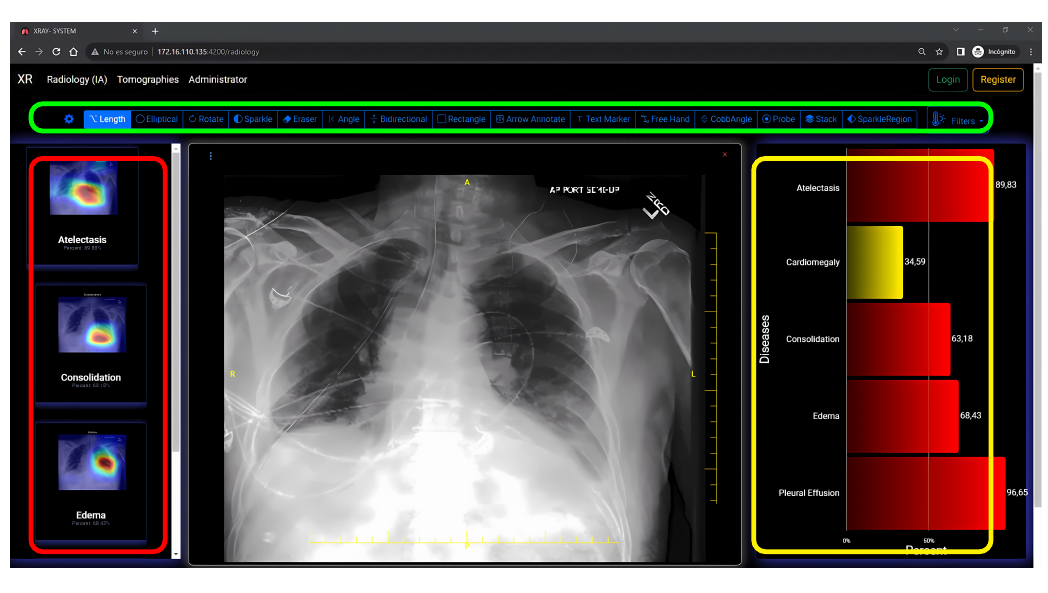

Deep learning for healthcare: web-microservices system ready for chest pathology detection

Sebastian Quevedo, Hamed Behzadi-Khormouji, Federico Dominguez, Enrique Pelaez

CIST 2024

PDF

Automatic extraction of lightweight and efficient neural network architecture of heavy convolutional architectures to predict microsatellite instability from hematoxylin and eosin histology in gastric cancer

Habib Rostami, Maryam Ashkpour, Hamed Behzadi-Khormouji et al.

Neural Computing and Applications (NCA) 2024

PDF

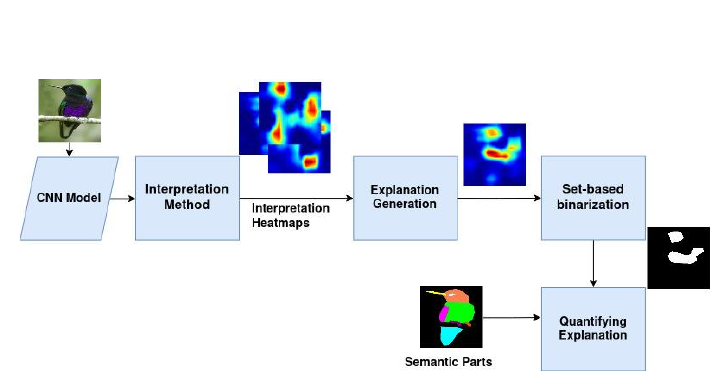

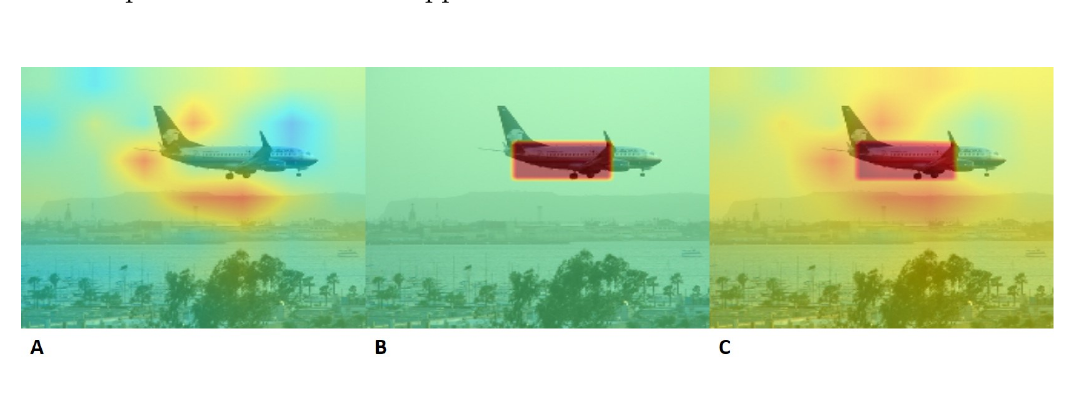

A Protocol for Evaluating Model Interpretation Methods from Visual Explanations

Hamed Behzadi-Khormouji, José Oramas M.

WACV 2023

PDF | Supp. Material | Bibtex

Interpreting Deep Models by Explaining their Predictions

Toon Meynen, Hamed Behzadi-Khormouji, José Oramas M.

ICIP 2023

PDF (author version) | Poster

Enhancing performance of occlusion-based explanation methods by a hierarchical search method on input images

Hamed Behzadi-Khormouji, Habib Rostami

ECML-PKDD Workshops (AIMLAI) 2021

PDF (author version)

Datasets

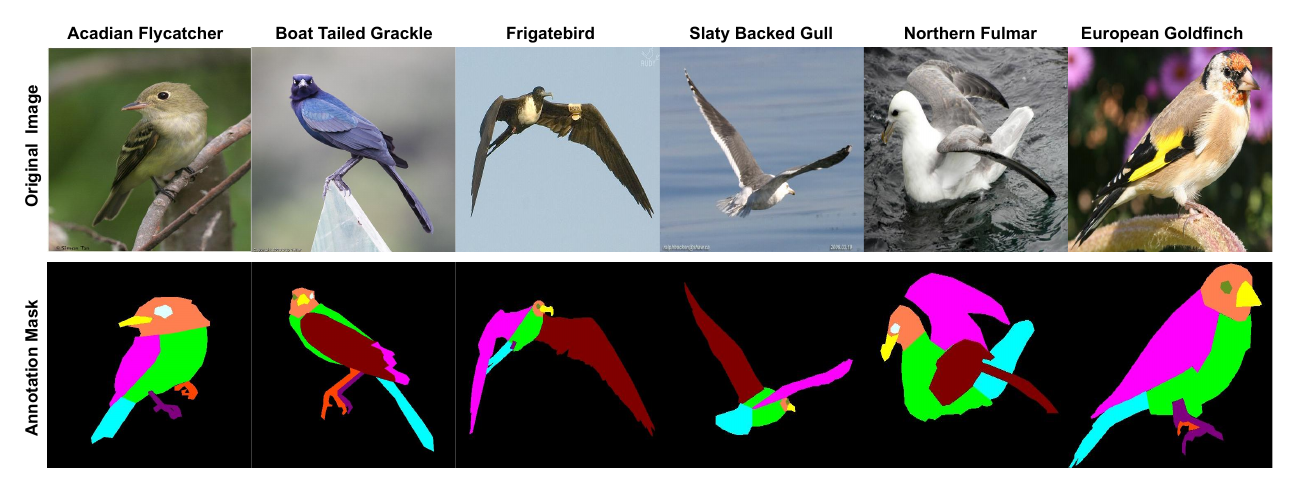

CUB70-PartSegmentation

This dataset is a subset derived from the first 70 classes of the CUB-200 dataset. For this subset we manually produced pixel-wise annotation masks for 11 parts, namely head, right eye, left eye, beak, neck, body, right wing, left wing, right leg, left leg, and tail. Note that the annotated parts do not overlap spatially in either the training or the test set. The training set contains 70 classes with 30 images per class, and the test set contains 1976 images in total across the 70 classes.
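Because the parts do not overlap, the 11 per-part masks of an image can, in principle, be merged into a single label map without losing information. The following is a minimal sketch of that idea under stated assumptions: the mask format (2D lists of 0/1 values), the label indices (0 for background, 1 to 11 for the parts in the order listed above) and the helper `merge_part_masks` are hypothetical and are not part of the released dataset. It also records the split sizes stated above (70 × 30 = 2100 training images).

```python
# Hypothetical sketch: part names follow the dataset description, but the
# label indices, mask format and helper names are illustrative assumptions.
PART_NAMES = [
    "head", "right eye", "left eye", "beak", "neck", "body",
    "right wing", "left wing", "right leg", "left leg", "tail",
]

# Split sizes from the dataset description: 70 classes x 30 training images.
NUM_CLASSES = 70
TRAIN_IMAGES_PER_CLASS = 30
NUM_TRAIN_IMAGES = NUM_CLASSES * TRAIN_IMAGES_PER_CLASS
NUM_TEST_IMAGES = 1976


def merge_part_masks(masks, height, width):
    """Merge per-part binary masks into a single label map.

    masks: dict mapping a part name to a height x width 2D list of 0/1 values.
    Returns a 2D list where 0 is background and i + 1 is PART_NAMES[i].
    Raises ValueError if two parts claim the same pixel, since the parts
    are described as non-overlapping.
    """
    label_map = [[0] * width for _ in range(height)]
    for index, name in enumerate(PART_NAMES, start=1):
        mask = masks.get(name)
        if mask is None:
            continue  # no mask supplied for this part
        for r in range(height):
            for c in range(width):
                if mask[r][c]:
                    if label_map[r][c] != 0:
                        raise ValueError(f"parts overlap at pixel ({r}, {c})")
                    label_map[r][c] = index
    return label_map


if __name__ == "__main__":
    toy_masks = {
        "head": [[1, 1, 0], [0, 0, 0]],
        "tail": [[0, 0, 0], [0, 0, 1]],
    }
    print(merge_part_masks(toy_masks, 2, 3))  # [[1, 1, 0], [0, 0, 11]]
```

The overlap check doubles as a sanity test of the non-overlap property: if it ever raised on real annotations, a single label map would no longer be a lossless encoding.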

Please refer to our paper for the performance of several methods on the CUB70-PartSegmentation dataset.